Quickwit 0.4: Distributed indexing with Kafka, K8s-native, and more!

Today, we are proud to announce the release of Quickwit 0.4. More than 600 pull requests have been merged into the project since our last release six months ago, including some significant contributions from the community. The main new features in this version are:

- Distributed indexing with Kafka

- Native support for Kubernetes and an official Helm chart

- Index partitioning

- Support for deletions

- Retention policies

Distributed indexing with Kafka

While Quickwit can comfortably ingest more than 2 TB of data per day on a single node with modest hardware, the project aims to enable full-text search on unlimited volumes. In this release, we focused on Kafka-based ingestion: Quickwit relies on Kafka consumer groups to spread the consumption of Kafka topics, and therefore the indexing work, across multiple nodes. We thank @kstaken for helping us design, implement, and test this work. In the next release, Quickwit will ship with a control plane and support distributed indexing generically, regardless of source type.
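To give an intuition of how a consumer group divides the work, here is a minimal Python sketch of round-robin partition assignment. It is a simplification under our own assumptions: in practice, group membership and partition assignment are coordinated by Kafka and its client library (which offer several assignment strategies), not computed by code like this, and the node names are hypothetical.

```python
from collections import defaultdict


def assign_partitions(partitions: list[int], members: list[str]) -> dict[str, list[int]]:
    """Spread topic partitions across consumer-group members, round-robin style.

    Each partition gets exactly one owner, so every member consumes (and, in
    this analogy, indexes) a disjoint subset of the topic.
    """
    if not members:
        raise ValueError("a consumer group needs at least one member")
    # Sort inputs so that every node would compute the same assignment.
    ordered_members = sorted(members)
    assignment: dict[str, list[int]] = defaultdict(list)
    for i, partition in enumerate(sorted(partitions)):
        assignment[ordered_members[i % len(ordered_members)]].append(partition)
    return dict(assignment)


# Six partitions shared by two hypothetical indexer nodes.
print(assign_partitions(list(range(6)), ["indexer-b", "indexer-a"]))
# {'indexer-a': [0, 2, 4], 'indexer-b': [1, 3, 5]}

# A third node joins: the partitions are redistributed across all three.
print(assign_partitions(list(range(6)), ["indexer-a", "indexer-b", "indexer-c"]))
# {'indexer-a': [0, 3], 'indexer-b': [1, 4], 'indexer-c': [2, 5]}
```

At any given time, each partition is consumed by a single member; when a node joins or leaves, the group rebalances and the partitions are redistributed.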

Native support for Kubernetes and official Helm chart

Since the last release, we have extensively tested Quickwit on Kubernetes. We are happy to announce that we now officially support Kubernetes as a deployment platform. In addition, @clemcvlcs and @mkhpalm put a lot of effort into creating a comprehensive Helm chart that makes it straightforward to install, configure, and upgrade Quickwit on Kubernetes.

Index partitioning

Index partitioning routes documents into isolated splits depending on a field or a set of fields. On the search path, this allows for more efficient split pruning and faster queries. For instance, an organization deploying multiple applications on multiple clusters can decide to partition a Quickwit index on the “app” field by declaring a partition key in the doc mapping of the index configuration:

```yaml
version: 0.4
index_id: acme-inc-logs
doc_mapping:
  field_mappings:
    - name: app
      type: text
      tokenizer: raw
    - name: dt
      type: datetime
    - name: severity
      type: text
      tokenizer: raw
      fast: true
    - name: message
      type: text
  partition_key: app
[...]
```
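The routing and pruning described above can be sketched in a few lines of Python. This is an illustration under simplifying assumptions (one split per distinct value of the partition key, exact-match queries on that key); Quickwit's actual split layout and pruning logic are more involved, and the sample documents are made up.

```python
from collections import defaultdict


def route_by_partition_key(docs, partition_key):
    """Group documents by the value of their partition key (one group per future split)."""
    splits = defaultdict(list)
    for doc in docs:
        splits[doc.get(partition_key)].append(doc)
    return dict(splits)


def prune_splits(splits, query_value):
    """For a query like `app:checkout`, keep only the splits that can contain matches."""
    return {value: docs for value, docs in splits.items() if value == query_value}


docs = [
    {"app": "checkout", "severity": "ERROR", "message": "payment timeout"},
    {"app": "search", "severity": "INFO", "message": "query served"},
    {"app": "checkout", "severity": "INFO", "message": "order placed"},
]
splits = route_by_partition_key(docs, "app")
print(sorted(splits))  # ['checkout', 'search']

# Searching app:checkout opens one split instead of two.
candidates = prune_splits(splits, "checkout")
print([d["message"] for d in candidates["checkout"]])  # ['payment timeout', 'order placed']
```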

Support for deletions

Quickwit now supports deletions through the new delete API. This feature mainly exists to help users comply with regulations such as the CCPA (California Consumer Privacy Act) or the GDPR (General Data Protection Regulation). It should be used sparingly, as deleting large amounts of data in Quickwit remains an expensive operation.
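The reason large deletions are expensive becomes clearer with a toy model. The Python sketch below assumes that a delete request is recorded as a task and that pending tasks are later applied split by split: every split must be searched, and each split that contains matching documents is rewritten. The ToyIndex and Split classes and the delete_opstamp field are our own hypothetical names, not Quickwit's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Split:
    split_id: str
    docs: list
    delete_opstamp: int = 0  # last delete task already applied to this split


@dataclass
class ToyIndex:
    splits: list
    delete_tasks: list = field(default_factory=list)  # (opstamp, predicate) pairs

    def delete(self, predicate):
        """Record a delete task; it is applied later, split by split."""
        self.delete_tasks.append((len(self.delete_tasks) + 1, predicate))

    def apply_pending_deletes(self):
        """Apply pending delete tasks; return the IDs of splits that had to be rewritten."""
        rewritten = []
        for split in self.splits:
            pending = [(op, pred) for op, pred in self.delete_tasks if op > split.delete_opstamp]
            if not pending:
                continue
            # Every split with pending tasks is searched; only splits with a match are rewritten.
            kept = [d for d in split.docs if not any(pred(d) for _, pred in pending)]
            if len(kept) != len(split.docs):
                split.docs = kept
                rewritten.append(split.split_id)
            split.delete_opstamp = pending[-1][0]
        return rewritten


index = ToyIndex([
    Split("s1", [{"user": "alice"}, {"user": "bob"}]),
    Split("s2", [{"user": "carol"}]),
    Split("s3", [{"user": "alice"}]),
])
index.delete(lambda doc: doc["user"] == "alice")  # e.g. an erasure request
print(index.apply_pending_deletes())  # ['s1', 's3']
print(index.apply_pending_deletes())  # [] (nothing pending anymore)
```

In this model, a delete that matches documents scattered across many splits forces many rewrites, which is why bulk deletions are costly.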

Boolean, datetime, and IP address fields

We’ve added new field types to natively handle booleans, datetimes, and IP addresses. You can find out more about these fields in our documentation.
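Natively handling these types means validating and normalizing values at ingestion. The sketch below shows one way to do this in Python for JSON input. The function names are hypothetical, the datetime handling covers only a basic subset of RFC 3339, and mapping IPv4 addresses to IPv4-mapped IPv6 addresses is a common technique for storing both address families in a single field, which we present as an assumption rather than as Quickwit's documented behavior. Refer to the documentation for the input formats Quickwit actually accepts.

```python
import ipaddress
from datetime import datetime, timezone


def parse_bool(value):
    """Accept genuine JSON booleans only, rejecting strings such as "yes"."""
    if not isinstance(value, bool):
        raise ValueError(f"not a boolean: {value!r}")
    return value


def parse_datetime(value):
    """Parse a basic RFC 3339 timestamp such as 2022-11-21T10:00:00Z into UTC."""
    dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if dt.tzinfo is None:
        raise ValueError(f"timestamp without timezone: {value!r}")
    return dt.astimezone(timezone.utc)


def parse_ip(value):
    """Normalize IPv4 and IPv6 to IPv6, mapping a.b.c.d to ::ffff:a.b.c.d."""
    ip = ipaddress.ip_address(value)
    if ip.version == 4:
        ip = ipaddress.IPv6Address(f"::ffff:{ip}")
    return ip


print(parse_datetime("2022-11-21T10:00:00+01:00"))  # 2022-11-21 09:00:00+00:00
print(parse_ip("192.168.0.1").ipv4_mapped)          # 192.168.0.1
```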

Retention policies

Retention policies configure how long data is retained on object storage before being deleted. They are defined in the retention section of the index config. The period parameter sets how long data is kept, whereas the schedule parameter specifies how often the policy is evaluated:

```yaml
version: 0.4
index_id: acme-inc-logs
doc_mapping:
  [...]
retention:
  period: 7 days
  schedule: hourly
```
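Evaluating a retention policy boils down to comparing split time ranges with a cutoff. The Python sketch below assumes a split expires only once its most recent document is older than now minus the retention period, so a split straddling the cutoff is kept; the split IDs and timestamps are invented for the example.

```python
from datetime import datetime, timedelta, timezone


def expired_splits(splits, period, now):
    """Return the IDs of splits whose newest document is older than `now - period`.

    `splits` maps a split ID to the max timestamp of the documents it contains.
    """
    cutoff = now - period
    return sorted(split_id for split_id, max_ts in splits.items() if max_ts < cutoff)


now = datetime(2022, 11, 21, 12, 0, tzinfo=timezone.utc)
splits = {
    "split-old": now - timedelta(days=9),
    "split-edge": now - timedelta(days=6, hours=23),
    "split-new": now - timedelta(hours=1),
}
# With period: 7 days and schedule: hourly, a check like this would run once an hour.
print(expired_splits(splits, timedelta(days=7), now))  # ['split-old']
```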

Increased observability with Prometheus metrics and pre-built Grafana dashboards

We have added numerous Prometheus metrics to track the performance and stability of Quickwit in production and to help troubleshoot issues. They can be scraped at the /metrics endpoint or explored in Grafana with our ready-to-use dashboards.
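For a quick check without a full Prometheus setup, the endpoint can be read with Python's standard library. This sketch assumes a node listening on localhost:7280 (Quickwit's default REST port; adjust it for your deployment), and its parser handles only simple lines of the Prometheus text format (no spaces in label values, timestamps ignored). The metric names in the usage example are hypothetical.

```python
import urllib.request


def fetch_metrics(base_url="http://localhost:7280"):
    """Download the Prometheus text exposition served at /metrics."""
    with urllib.request.urlopen(f"{base_url}/metrics", timeout=5) as response:
        return response.read().decode("utf-8")


def parse_samples(text):
    """Map each sample (metric name plus labels) to its value.

    Skips blank lines and # HELP / # TYPE comments; assumes label values
    contain no spaces and ignores the optional trailing timestamp.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, value = line.split()[:2]
        samples[name] = float(value)
    return samples


# Hypothetical metric names, for illustration only.
example = """\
# HELP example_requests_total Requests served.
# TYPE example_requests_total counter
example_requests_total{method="search"} 42
example_up 1
"""
print(parse_samples(example))
# {'example_requests_total{method="search"}': 42.0, 'example_up': 1.0}
# Against a running node: parse_samples(fetch_metrics())
```

In production, let Prometheus do the scraping; a helper like this is mostly handy for smoke tests.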

REST API for creating indexes and adding sources

Until now, users had to use the Quickwit CLI to create and delete indexes or to add and remove sources. Quickwit 0.4 exposes new REST API endpoints for these operations, so you can perform them directly with your favorite HTTP client.
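As a sketch of what this looks like with Python's standard library, the helpers below build the HTTP requests without sending them. The endpoint paths (api/v1/indexes and api/v1/indexes/<index id>/sources) and the localhost:7280 base URL reflect our understanding of the 0.4 REST API and should be checked against the REST API reference; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: a local node on the default REST port.
BASE_URL = "http://localhost:7280/api/v1"


def _json_post(url, payload):
    """Build a POST request carrying `payload` as a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_index_request(index_config):
    """POST an index config (as a dict) to create an index."""
    return _json_post(f"{BASE_URL}/indexes", index_config)


def delete_index_request(index_id):
    """DELETE an index by ID."""
    return urllib.request.Request(f"{BASE_URL}/indexes/{index_id}", method="DELETE")


def add_source_request(index_id, source_config):
    """POST a source config (as a dict) to attach a source to an index."""
    return _json_post(f"{BASE_URL}/indexes/{index_id}/sources", source_config)


def remove_source_request(index_id, source_id):
    """DELETE a source from an index."""
    return urllib.request.Request(
        f"{BASE_URL}/indexes/{index_id}/sources/{source_id}", method="DELETE"
    )


req = delete_index_request("acme-inc-logs")
print(req.get_method(), req.full_url)
# DELETE http://localhost:7280/api/v1/indexes/acme-inc-logs
# urllib.request.urlopen(req) would send it to a running node.
```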

Better under the hood

Metastore improvements

Index partitioning generates many more splits, and during our tests we observed increased pressure on the metastore. Although the load remained manageable, we proactively optimized some operations (batching) and rewrote some queries to regain headroom. We’ve also identified further optimization opportunities (data model, indexes) for the next release.

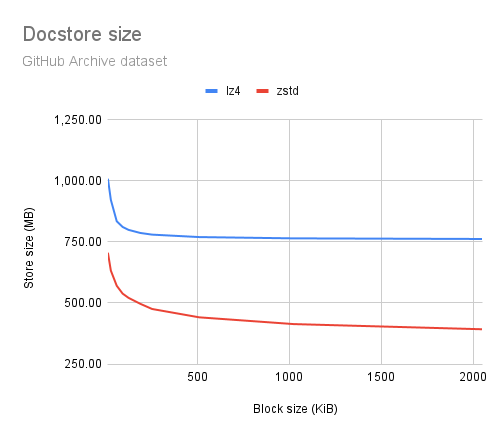

Compression gains

We’ve switched the compression algorithm used in the “docstore” (tantivy’s row-oriented document store) from LZ4 to ZSTD and increased the default block size from 16 KB to 1 MB. Depending on the dataset, we’ve observed substantial compression gains, ranging from 25% to 60%. ZSTD compresses more slowly than LZ4, but we also moved the docstore compression logic to a dedicated thread, out of the critical path, so the change didn’t impact time to search. The block size and compression algorithm used in the docstore remain configurable for users with specific constraints or requirements.
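The effect of the block size can be reproduced on synthetic data. Python's standard library has no ZSTD binding, so this sketch swaps in zlib: it illustrates only why larger blocks compress better (fewer independent blocks, each paying its own overhead and starting with no history), not the LZ4 to ZSTD switch itself, and its numbers say nothing about the 25% to 60% gains reported above. The log lines are made up.

```python
import zlib


def compressed_size(data: bytes, block_size: int) -> int:
    """Compress `data` as independent blocks and return the total compressed size."""
    return sum(
        len(zlib.compress(data[start:start + block_size], 6))
        for start in range(0, len(data), block_size)
    )


# Synthetic, repetitive JSON log lines standing in for serialized documents.
severities = ("INFO", "WARN", "ERROR")
data = "".join(
    f'{{"app": "app-{i % 5}", "severity": "{severities[i % 3]}", '
    f'"message": "request {i} served in {i % 97} ms"}}\n'
    for i in range(40_000)
).encode("utf-8")

small = compressed_size(data, 16 * 1024)    # 16 KB blocks, the previous default
large = compressed_size(data, 1024 * 1024)  # 1 MB blocks, the new default
print(f"raw: {len(data)} B, 16 KB blocks: {small} B, 1 MB blocks: {large} B")
```

zlib only looks back 32 KB, so it cannot exploit much of the redundancy inside a 1 MB block; ZSTD supports far larger windows, so results with real ZSTD blocks may differ.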

What’s next?

These are the features on our roadmap for Quickwit 0.5:

- Distributed and replicated ingestion queue (ingest API)

- Tiered storage (local drives, block storage, object storage) and transition policies

- Transforms using Vector Remap Language (VRL)

- Native support for OpenTelemetry exporters (logs and traces)

- Jaeger integration

- Grafana data source

We plan to deliver this next version of Quickwit in Q2 2023. Our roadmap is public, and you can follow our progress in this GitHub project.

In the meantime, we invite you to check out this quick start guide to ingest your first dataset with Quickwit.

Finally, if you have questions about Quickwit or encounter any issues, feel free to start a discussion or open an issue on GitHub, or contact us directly on Discord.