Quickwit 0.8: Indexing and Search at Petabyte Scale

Quickwit, the OSS Cloud Native Search Engine for Logs and Traces, now scales to petabytes of data!

We're excited to unveil Quickwit 0.8! Compared to previous versions, this version brings a rather short list of new "visible" features. Most of the improvements are in the invisible realms of scalability, efficiency, and performance.

Over the last few months, we have been working hard with Quickwit customers, partners, and open-source users on a range of challenging use cases.

Together, these use cases have stretched Quickwit's capability in:

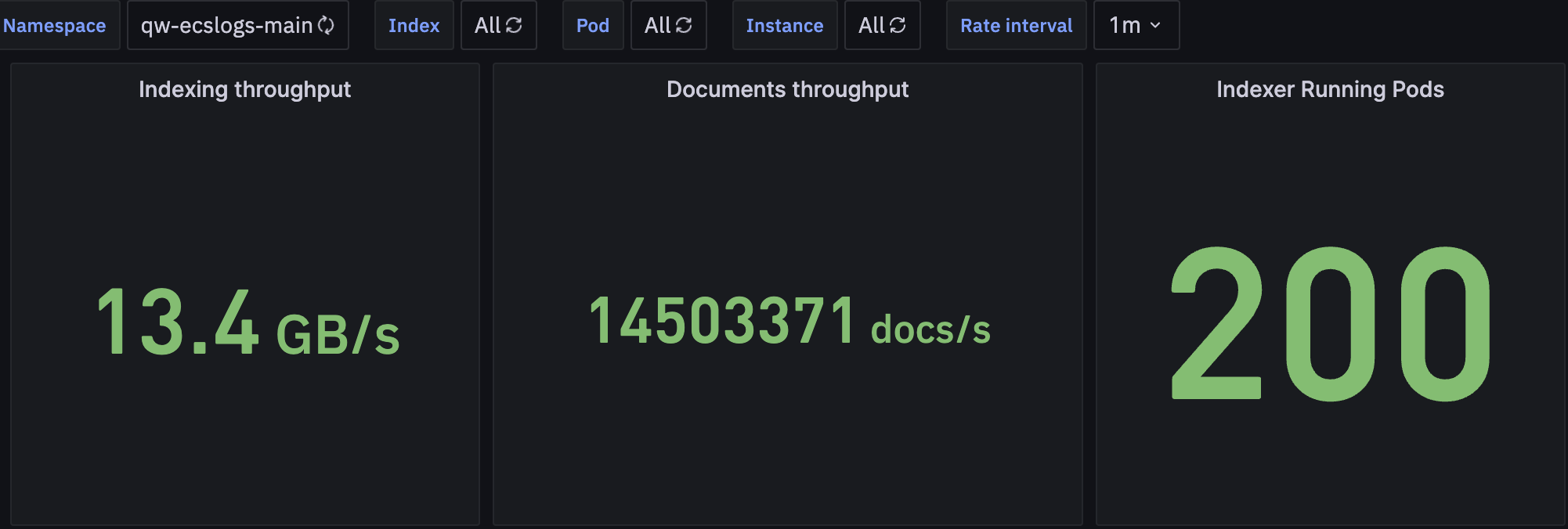

- number of nodes (up to 200)

- indexing speed (up to 1PB/day, or 13GiB/s)

- number of indexes (up to 3,000)

- and total amount of searchable data (several PBs of data).

In the process, we uncovered a chain of unexpected bottlenecks and inefficiencies that we tackled one after the other.

Thanks to these efforts, Quickwit has reached a significant milestone: Quickwit is now running at petabyte scale!

On the visible side, we have also greatly enhanced our Grafana plugin to deliver a seamless search experience within Grafana Explore and Dashboards. It packs features like autocomplete hints, a context query editor, and support for ad-hoc filters. The new plugin requires Quickwit 0.8.

Last but not least, we're introducing our distributed ingest API in beta and looking for feedback from our users.

Let's unpack this before looking at what's coming next.

Achieving 1 PB/day in Indexing

Scalability has always been a primary focus at Quickwit. Unfortunately, we have neither the data nor the finances to play with petabytes. Our largest tests typically stop at 400MB/s of indexing throughput and 100TB of data overall. Quickwit handles this amount of data like a champ, so we expected it to behave properly at petabyte scale, but we never had the chance to confirm that.

We stayed cautious and resisted the sirens of modern marketing. No premature claims. We would only call Quickwit petabyte-scale when someone has a Quickwit cluster in production with that data.

This is now done!

Our largest user reached an indexing throughput of over 1 PB per day, equivalent to 13.4 GiB/s or 14 million docs/s, using 200 pods, each equipped with 6 vCPUs and 8GB of RAM.
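As a quick sanity check on these figures, the conversion can be sketched in a few lines of Python. The constants are the numbers quoted above; the variable names are ours, and "PB" is assumed to mean decimal petabytes (10^15 bytes).

```python
# Figures quoted in the post.
GIB = 2**30                      # bytes in one GiB
throughput_gib_s = 13.4          # cluster-wide indexing throughput
docs_per_s = 14_000_000          # cluster-wide document rate
pods = 200                       # number of indexer pods

bytes_per_s = throughput_gib_s * GIB

# Daily volume in decimal petabytes (assumption: 1 PB = 10**15 bytes).
pb_per_day = bytes_per_s * 86_400 / 1e15

# Per-pod load and average document size implied by the numbers above.
mib_per_s_per_pod = throughput_gib_s * 1024 / pods
docs_per_s_per_pod = docs_per_s / pods
avg_doc_bytes = bytes_per_s / docs_per_s

print(f"{pb_per_day:.2f} PB/day")
print(f"{mib_per_s_per_pod:.1f} MiB/s per pod")
print(f"{docs_per_s_per_pod:,.0f} docs/s per pod")
print(f"{avg_doc_bytes:.0f} bytes per document on average")
```

This works out to roughly 1.24 PB/day, about 69 MiB/s and 70,000 docs/s per pod, and an average document size of about 1 KB.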

Searching for a needle in petabytes of data

It is one thing to index at 1 PB/day; it is another to search through the amassed data, which can quickly reach tens of petabytes. Once again, on paper, Quickwit is supposed to scale well, but we had never tested Quickwit above 100TB.

The largest Quickwit production search cluster has around 40 PB of uncompressed logs, over 10 indexes, 50 trillion (5x10¹³) log entries, and an index of 7.5 PB stored on S3. User feedback has been very positive. With 30 beefy searcher pods, users are very happy with the search performance.
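To put these numbers in perspective, here is a small back-of-the-envelope sketch. It only uses the figures quoted above, assumes decimal units (1 PB = 10^15 bytes), and assumes the index is spread evenly across searcher pods, which is a simplification on our part.

```python
# Figures quoted in the post (decimal units assumed).
PB = 10**15
TB = 10**12

uncompressed_bytes = 40 * PB     # raw logs
index_bytes = 7.5 * PB           # index stored on S3
log_entries = 50 * 10**12        # 50 trillion entries
searcher_pods = 30

# Average raw size of a log entry.
bytes_per_entry = uncompressed_bytes / log_entries

# Index size relative to the uncompressed data.
index_ratio = index_bytes / uncompressed_bytes

# Index volume each searcher pod is responsible for, if spread evenly.
index_tb_per_pod = index_bytes / searcher_pods / TB

print(f"{bytes_per_entry:.0f} bytes per log entry")
print(f"index is {index_ratio:.1%} of the uncompressed size")
print(f"{index_tb_per_pod:.0f} TB of index per searcher pod")
```

In other words, each entry averages 800 bytes, the index is a bit under a fifth of the raw data, and each searcher pod covers about 250 TB of index sitting on object storage.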

Reaching 300 search QPS

Most object storage providers (e.g. Amazon S3, Azure Blob Storage, Google Cloud Storage) have similar cost models:

- $25 per TB per month,

- $0.005 per 1,000 PUT requests,

- $0.0004 per 1,000 GET requests.

The last two points are too often overlooked.

Let's have a look at what they mean for Quickwit.

Quickwit issues one PUT request every time it adds a split. With commit_timeout_secs set to 30s, you should expect fewer than 200,000 PUT requests per index per month (including merges). In other words, each index with a single pipeline will cost around $1 per month in PUT requests.

This is usually manageable.
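The PUT estimate can be reproduced with a short sketch. It assumes a 30-day month and one new split (hence one PUT) per commit timeout; the 200,000 upper bound including merges is the figure from the text, not something the code derives. The function and constant names are ours.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600   # assumption: 30-day month
PUT_PRICE_PER_1000 = 0.005           # USD, from the pricing list above


def monthly_put_cost(put_requests: int) -> float:
    """Cost in USD of a given number of PUT requests."""
    return put_requests / 1000 * PUT_PRICE_PER_1000


commit_timeout_secs = 30

# One split committed every 30 seconds, before counting merges.
commits_per_month = SECONDS_PER_MONTH // commit_timeout_secs

# Upper bound quoted in the text, merges included.
put_upper_bound = 200_000

print(f"{commits_per_month:,} commits/month "
      f"-> ${monthly_put_cost(commits_per_month):.2f}")
print(f"{put_upper_bound:,} PUTs/month (bound) "
      f"-> ${monthly_put_cost(put_upper_bound):.2f}")
```

Commits alone account for 86,400 PUT requests per month, so the 200,000 bound leaves ample room for merge uploads while staying at about $1 per index.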

The cost associated with GET requests, on the other hand, can become a problem. A single search request typically emits half a dozen GET requests per split, and one split holds about 10 million documents.

For a reasonable index of 1 billion documents, that is about 100 splits, so a single query could induce 600 GET requests, which would cost $0.00024 per query.

Whether this is a lot depends on the number of queries per second (QPS) you need to address.

For an index of this size, Quickwit is cost-efficient if you have less than 0.1 QPS.

At that rate, you will pay about $60 per month just to run GET requests.
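Here is a minimal sketch of that GET cost estimate, so you can plug in your own index size and query rate. It assumes a 30-day month, 10 million documents per split, and six GET requests per split per query, as described above; the function names are ours, and real request counts depend on the query.

```python
import math

SECONDS_PER_MONTH = 30 * 24 * 3600   # assumption: 30-day month
GET_PRICE_PER_1000 = 0.0004          # USD, from the pricing list above


def get_requests_per_query(num_docs: int,
                           docs_per_split: int = 10_000_000,
                           gets_per_split: int = 6) -> int:
    """Rough number of GET requests one query emits without caching."""
    splits = math.ceil(num_docs / docs_per_split)
    return splits * gets_per_split


def cost_per_query(num_docs: int) -> float:
    """GET cost in USD of a single query."""
    return get_requests_per_query(num_docs) / 1000 * GET_PRICE_PER_1000


def monthly_get_cost(num_docs: int, qps: float) -> float:
    """GET cost in USD of a sustained query rate over one month."""
    return cost_per_query(num_docs) * qps * SECONDS_PER_MONTH


docs = 1_000_000_000
print(get_requests_per_query(docs))              # 600 GET requests
print(f"${cost_per_query(docs):.5f} per query")
print(f"${monthly_get_cost(docs, 0.1):.2f} per month at 0.1 QPS")
```

At 0.1 QPS this gives about $62 per month, and the cost grows linearly with both the query rate and the number of splits, which is why uncached high-QPS workloads get expensive quickly.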

With the introduction of local disk caching for split files in Quickwit 0.7, we unlocked the potential for higher-QPS search use cases.

Several users reported impressive QPS on moderate datasets of 100 million to 1 billion records, and we also ran our own benchmarks and reached 300 QPS on a single instance. This opens up new possibilities across various domains, including financial services with large transaction datasets. We are eager to see new use cases on this front.

Overall improved search performance

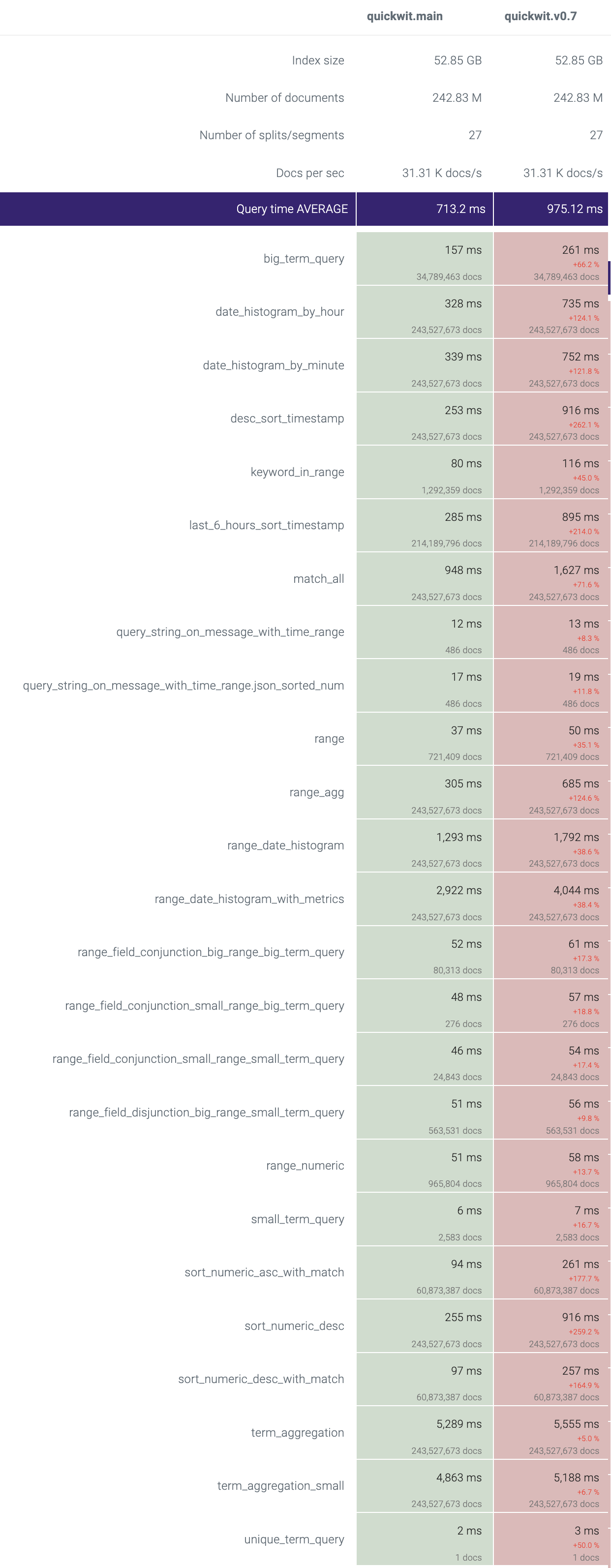

Version 0.8 includes several extensive search optimizations affecting all types of queries. Regardless of your workload, you can expect a significant performance improvement by upgrading.

Here is a breakdown of our internal benchmark, comparing Quickwit 0.7 and Quickwit 0.8. We hope green is your favorite color.

Improving the search experience with Grafana

In Quickwit Grafana Plugin 0.4.0, we added several features to enhance the search experience. The main ones are:

- Autocomplete hints on field names and values. This is especially useful if you have unstructured data with field names that you don't know.

- A new context query editor to explore before and after a pinned log line.

- Ad-hoc filter support: this is very handy when you want to filter all the panels of your dashboard easily.

Quickwit Grafana plugin 0.4.x will require you to use Quickwit 0.8.

Find more details in the GitHub repository.

Distributed ingest API and cooperative indexing

One more thing... We have worked with several partners on a new distributed ingest API. It is now in beta, and we are looking for feedback from our users. This new ingest API, coupled with some work around distributed and local scheduling, is designed to efficiently handle a large number of indexes. So far, our largest use case runs 3,000 indexes on a cluster with 10 indexer nodes with 10 vCPUs each.

It is a game changer for multi-tenant use cases. We are already working with several PaaS providers to back their tenants' log search. If you want to test Quickwit for such a service, get in touch with us to build a partnership.

To get a taste of the feature, you can activate the distributed ingest API by setting the QW_ENABLE_INGEST_V2 environment variable to true. To activate the cooperative indexing feature, you need to set enable_cooperative_indexing to true in the Quickwit config file:

    indexer:
      enable_cooperative_indexing: true

Coming next

Shipping the distributed ingest API in version 0.9 is our top priority. We have also started working on index configuration and schema updates, as well as support for OpenSearch Dashboards.

We are also cooking exciting partnerships with cool cloud providers to power their log services. We can't say more for now but we are very excited about it and we will keep you posted.

And if you are at KubeCon EU next week, we should meet there! We are sponsoring the great Kubetrain party. Drop me a message on Twitter or LinkedIn if you want to meet.

Happy searching!